Overview

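On Linux, etc is the name of the directory that holds configuration files; etcd is, accordingly, a configuration service. It originated at CoreOS, where it was first used to solve problems such as distributed concurrency control during OS upgrades in a cluster management system and the storage and distribution of configuration files. For that reason, etcd is designed as a small key-value (KV) data store that provides high availability and strong consistency.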

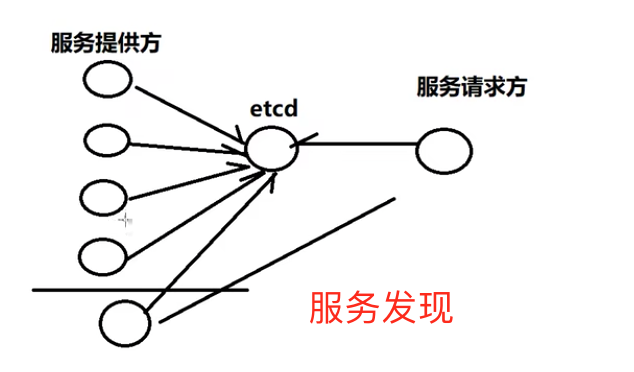

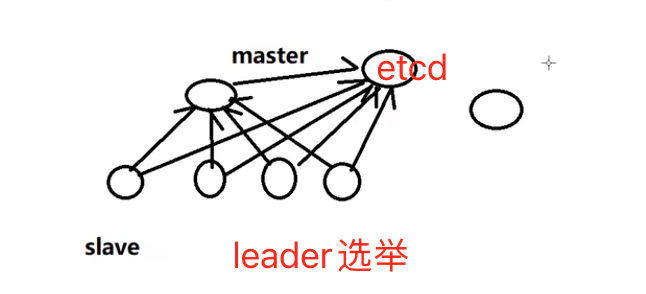

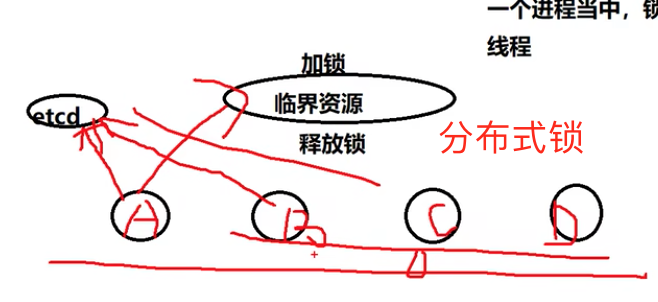

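etcd is implemented in Go. It is mainly used for shared configuration, service discovery, cluster monitoring, leader election, and distributed locks, and is meant to store small amounts of important data.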

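In service discovery, when a client needs to call a service, it sends a query to the service registry to obtain the list of available nodes for that service, and then picks one of those nodes to call.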
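The lookup flow can be sketched with a tiny in-memory registry. This is only an illustration of the idea, not etcd's API: `ServiceRegistry`, `register`, `deregister`, `lookup`, and `pick` are hypothetical names, and the addresses are made up. A real deployment would store these entries as etcd keys.

```python
import random


class ServiceRegistry:
    """Toy in-memory stand-in for a service registry (hypothetical, not etcd's API)."""

    def __init__(self):
        self._nodes = {}  # service name -> set of "host:port" addresses

    def register(self, service, address):
        self._nodes.setdefault(service, set()).add(address)

    def deregister(self, service, address):
        self._nodes.get(service, set()).discard(address)

    def lookup(self, service):
        """Return the sorted list of nodes currently registered for a service."""
        return sorted(self._nodes.get(service, set()))

    def pick(self, service, rng=random):
        """Choose one available node at random; raise if none is registered."""
        nodes = self.lookup(service)
        if not nodes:
            raise LookupError(f"no available node for {service!r}")
        return rng.choice(nodes)


registry = ServiceRegistry()
registry.register("orders", "10.0.0.1:8080")
registry.register("orders", "10.0.0.2:8080")
print(registry.lookup("orders"))
print(registry.pick("orders"))
```

Random choice is just one possible selection policy; round-robin or load-aware selection would fit the same `lookup` result.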

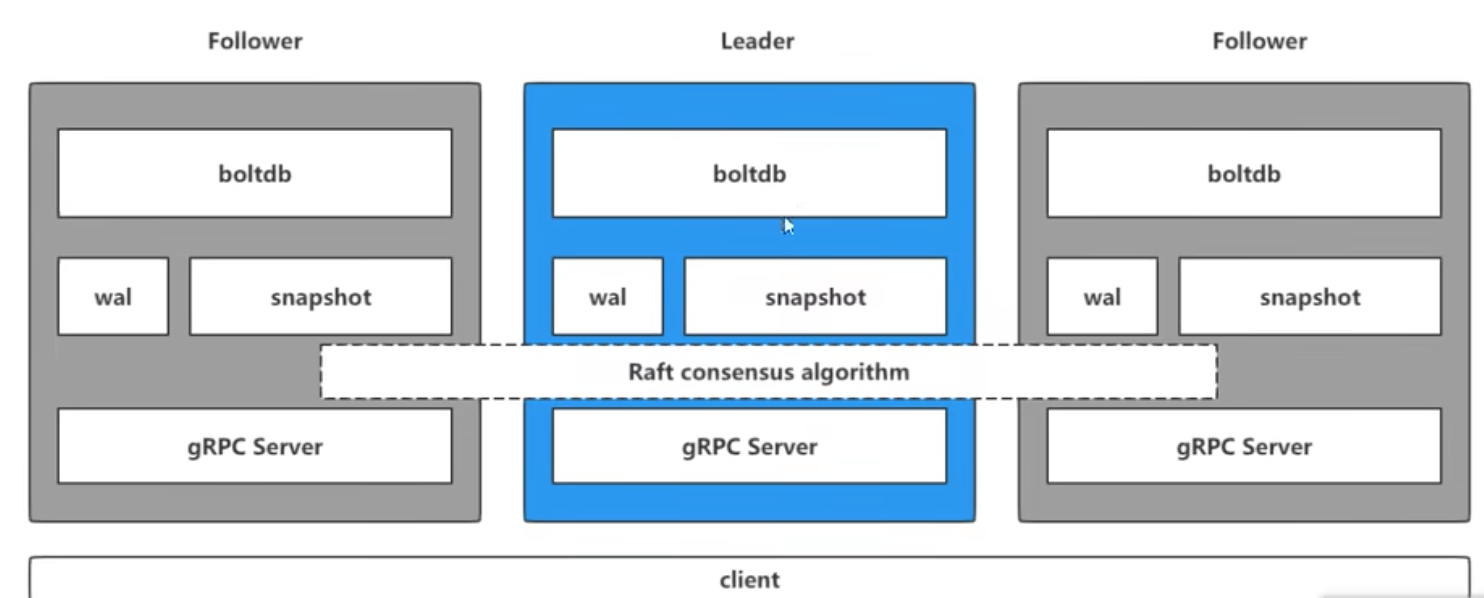

Architecture

etcdctl get key -w json

{"header": {"cluster_id":1481639068965178418, "member_id":10276657743932975437, "revision": 47, "raft_term":8}, ...}

etcdctl get key --rev=47

etcdctl lease grant 30

etcdctl put key test --lease=33435

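raft_term is the Raft term, a 64-bit, globally monotonically increasing counter; it advances whenever a new election takes place, for example after a member restarts. revision is the global revision number, also 64-bit and globally monotonically increasing; every modification to etcd increases it by 1. create_revision is the global revision at which the key was created, and mod_revision is the global revision at which the key was last modified. In the JSON output, key and value are base64-encoded. version is the number of times the key has been changed since it was created.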
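How these counters move relative to each other can be sketched with a small model. This is a simplified illustration, not etcd's implementation: `ToyStore` is a hypothetical name, and starting the global revision at 0 is an assumption made for the sketch.

```python
import base64


class ToyStore:
    """Simplified model of the per-key counters described above (not etcd's code)."""

    def __init__(self):
        self.revision = 0  # global revision; the starting value is an assumption
        self.kvs = {}      # key -> metadata record

    def put(self, key, value):
        self.revision += 1  # every modification bumps the global revision
        old = self.kvs.get(key)
        self.kvs[key] = {
            # key and value are shown base64-encoded, as in the JSON output
            "key": base64.b64encode(key.encode()).decode(),
            "value": base64.b64encode(value.encode()).decode(),
            "create_revision": old["create_revision"] if old else self.revision,
            "mod_revision": self.revision,
            "version": old["version"] + 1 if old else 1,
        }
        return self.kvs[key]


store = ToyStore()
store.put("key", "test")
store.put("other", "x")           # bumps the global revision, not "key"'s version
entry = store.put("key", "test2")
print(entry)
print(base64.b64decode(entry["value"]).decode())
```

After these three writes, the entry for "key" keeps its original create_revision while mod_revision follows the global revision and version counts only the writes to that key.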

In memory, a B-tree maps each key to its revisions; on disk, a B+ tree maps each revision to its value.
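The two-step lookup (key to revision, then revision to value) is what makes historical reads such as `--rev=47` possible. Below is a minimal sketch that uses plain dicts and a sorted list in place of the B-tree and B+ tree; `ToyMVCC` and its attribute names are hypothetical.

```python
import bisect


class ToyMVCC:
    """Two-level lookup: key -> revisions (index), revision -> value (backend)."""

    def __init__(self):
        self.revision = 0
        self.index = {}    # stands in for the in-memory tree: key -> ascending revisions
        self.backend = {}  # stands in for the on-disk tree: revision -> value

    def put(self, key, value):
        self.revision += 1
        self.index.setdefault(key, []).append(self.revision)
        self.backend[self.revision] = value
        return self.revision

    def get(self, key, rev=None):
        """Return the value of key as of revision rev (latest if rev is None)."""
        revs = self.index.get(key, [])
        at = self.revision if rev is None else rev
        pos = bisect.bisect_right(revs, at)  # how many of the key's revisions are <= at
        if pos == 0:
            return None  # the key did not exist yet at that revision
        return self.backend[revs[pos - 1]]


db = ToyMVCC()
db.put("key", "v1")
db.put("other", "x")
db.put("key", "v2")
print(db.get("key"), db.get("key", rev=2))
```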

A lease holds expiry information, and multiple keys can be bound to the same lease.
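The effect of binding several keys to one lease can be sketched as follows. This is a toy model with an explicit clock passed in as `now`; `ToyLeases` and its methods are hypothetical names, not etcd's API.

```python
class ToyLeases:
    """Keys attached to a lease disappear together when the lease expires."""

    def __init__(self):
        self.kv = {}
        self.leases = {}  # lease id -> {"expires_at": float, "keys": set}
        self._next_id = 1

    def grant(self, ttl, now):
        lease_id = self._next_id
        self._next_id += 1
        self.leases[lease_id] = {"expires_at": now + ttl, "keys": set()}
        return lease_id

    def put(self, key, value, lease_id=None):
        self.kv[key] = value
        if lease_id is not None:
            self.leases[lease_id]["keys"].add(key)

    def keep_alive(self, lease_id, ttl, now):
        """Refresh the lease so its keys survive for another ttl seconds."""
        self.leases[lease_id]["expires_at"] = now + ttl

    def expire(self, now):
        """Drop every expired lease together with all keys bound to it."""
        expired = [i for i, l in self.leases.items() if l["expires_at"] <= now]
        for lease_id in expired:
            for key in self.leases.pop(lease_id)["keys"]:
                self.kv.pop(key, None)


store = ToyLeases()
lease = store.grant(ttl=30, now=0)
store.put("a", "1", lease)
store.put("b", "2", lease)
store.put("c", "3")          # no lease: never expires
store.expire(now=30)
print(sorted(store.kv))
```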

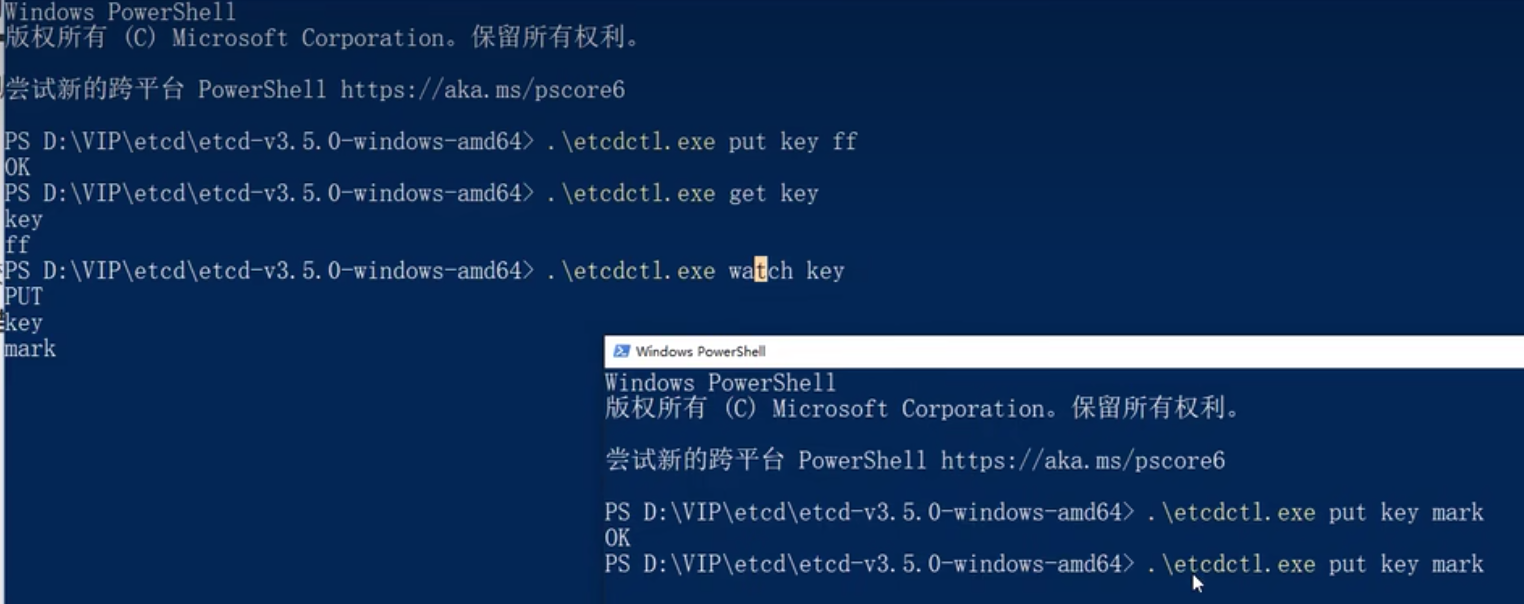

Basic KV operations: put, del, get

Watching a key for state changes: watch key
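Conceptually, a watch is a subscription that receives an event for each change to the watched key. A minimal callback-based sketch, assuming hypothetical names (`ToyWatchStore`, `watch`, `delete`) and an in-process store instead of a network stream:

```python
class ToyWatchStore:
    """Notifies registered callbacks whenever a watched key changes."""

    def __init__(self):
        self.kv = {}
        self.watchers = {}  # key -> list of callbacks

    def watch(self, key, callback):
        self.watchers.setdefault(key, []).append(callback)

    def _notify(self, event_type, key, value):
        for callback in self.watchers.get(key, []):
            callback(event_type, key, value)

    def put(self, key, value):
        self.kv[key] = value
        self._notify("PUT", key, value)

    def delete(self, key):
        if key in self.kv:
            del self.kv[key]
            self._notify("DELETE", key, None)


events = []
store = ToyWatchStore()
store.watch("key", lambda *event: events.append(event))
store.put("key", "a")
store.put("other", "b")  # not watched, so no event is recorded
store.delete("key")
print(events)
```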

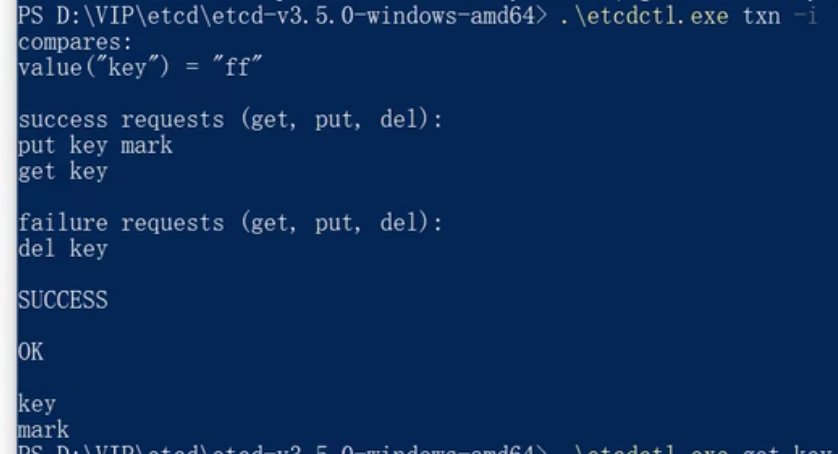

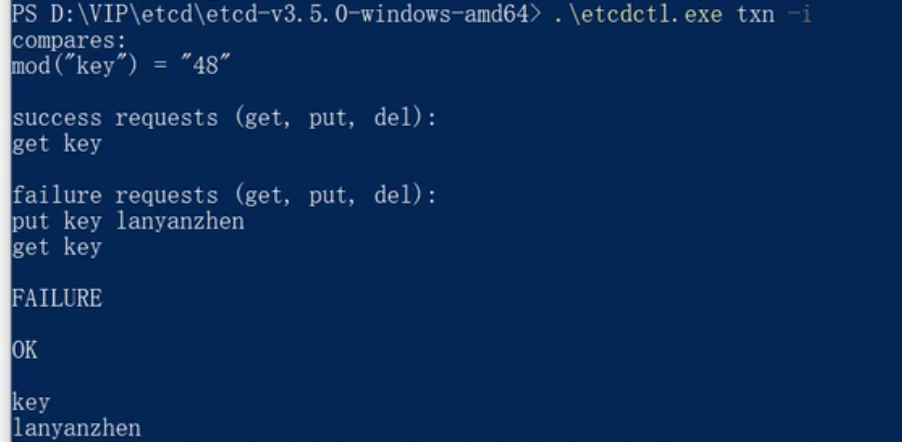

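Each key has an index entry, and that index leads into a B+ tree that stores the key's historical versions.

Transactions operate on keys and can compare the following per-key values:

- value(key): the key's current value

- create(key): the revision at which the key was created

- mod(key): the revision at which the key was last modified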
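A transaction built on these comparisons is essentially an if/then/else executed atomically. The sketch below models only equality comparisons on a single-process store; `ToyTxnStore` and the tuple format for comparisons are hypothetical, and treating a missing key as revision 0 is how the sketch expresses "the key does not exist yet".

```python
class ToyTxnStore:
    """Compare-then-write on value / create / mod, applied as one step."""

    def __init__(self):
        self.revision = 0
        self.kv = {}  # key -> {"value": ..., "create": int, "mod": int}

    def _apply(self, key, value, rev):
        old = self.kv.get(key)
        self.kv[key] = {"value": value,
                        "create": old["create"] if old else rev,
                        "mod": rev}

    def _current(self, field, key):
        entry = self.kv.get(key)
        if entry is None:
            return None if field == "value" else 0  # missing key: revision 0
        return entry[field]

    def txn(self, compares, on_success, on_failure=()):
        """compares: (field, key, expected) tuples; on_*: (key, value) puts."""
        ok = all(self._current(f, k) == expected for f, k, expected in compares)
        ops = on_success if ok else on_failure
        if ops:
            self.revision += 1  # all writes of one transaction share a revision
            for key, value in ops:
                self._apply(key, value, self.revision)
        return ok


store = ToyTxnStore()
# "Create the lock only if it does not exist": a building block for a distributed lock.
print(store.txn([("create", "lock", 0)], [("lock", "owner-1")]))
print(store.txn([("create", "lock", 0)], [("lock", "owner-2")]))
```

The second call fails because the key already has a non-zero create revision, so only one contender can acquire the lock.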

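boltdb is a single-node KV store with transaction support, and etcd's transactions are all built on boltdb transactions. boltdb organizes its data as a B+ tree, and that tree holds the versioned data belonging to each key.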

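WAL (write-ahead logging) is the standard way to implement a transaction log: the log record is written before the write operation itself is performed, much like MySQL's redo log. WAL writes are sequential, whereas writing directly into a B+ tree involves multiple I/O operations and random writes.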
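The log-first ordering can be sketched with an append-only file that is replayed on startup. This is a toy illustration of the write-ahead idea, not etcd's WAL format: `ToyWAL`, the JSON-lines encoding, and the file name are all assumptions.

```python
import json
import os
import tempfile


class ToyWAL:
    """Append each change to a log file before applying it; replay on startup."""

    def __init__(self, path):
        self.path = path
        self.state = {}
        self._replay()  # rebuild state from whatever was logged earlier
        self._log = open(path, "a", encoding="utf-8")

    def _replay(self):
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                record = json.loads(line)
                self.state[record["key"]] = record["value"]

    def put(self, key, value):
        # 1. Append the record sequentially and force it to disk...
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        # 2. ...and only then apply it to the in-memory state.
        self.state[key] = value

    def close(self):
        self._log.close()


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "toy.wal")
    wal = ToyWAL(path)
    wal.put("a", "1")
    wal.put("a", "2")
    wal.close()                      # simulate a restart
    recovered = ToyWAL(path)
    print(recovered.state)
    recovered.close()
```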

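A snapshot is a point-in-time copy of the data that other nodes use to synchronize with the leader and thereby reach a consistent state, similar to data recovery in Redis master-replica replication.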

Raft consensus algorithm

Reference: http://thesecretlivesofdata.com/raft/

All our nodes start in the follower state.

If followers don’t hear from a leader then they can become a candidate.

The candidate then requests votes from other nodes.

Nodes will reply with their vote.

The candidate becomes the leader if it gets votes from a majority of nodes.

This process is called Leader Election.

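To restate: every node starts in the follower state. A follower that receives nothing from a leader before its election timeout expires becomes a candidate, increments the term by one, and requests votes from the other nodes, which reply with their votes. If the candidate gets votes from a majority of nodes, it becomes the new leader. This process is called Leader Election.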

All changes to the system now go through the leader.

Each change is added as an entry in the node’s log.

This log entry is currently uncommitted so it won’t update the node’s value.

To commit the entry the node first replicates it to the follower nodes…

then the leader waits until a majority of nodes have written the entry.

The entry is now committed on the leader node and the node state is “5”.

The leader then notifies the followers that the entry is committed.

The cluster has now come to consensus about the system state.

This process is called Log Replication.

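To restate: all changes to the system now go through the leader. Each change is first appended as an entry to the leader's log; the entry is uncommitted at this point, so it does not yet update the node's value. To commit it, the leader replicates the entry to the followers and waits until a majority of nodes have written it. The entry is then committed on the leader, whose state becomes "5", and the leader notifies the followers that the entry is committed. The cluster has now reached consensus on the system state. This process is called Log Replication.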
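The commit rule (an entry counts as committed only once a majority of nodes have stored it) can be sketched from the leader's point of view. This is a simplified model with no terms, networking, or retries; `ToyLeader`, `propose`, and `ack` are hypothetical names.

```python
class ToyLeader:
    """Tracks which nodes stored each entry and commits on a majority."""

    def __init__(self, cluster_size):
        self.cluster_size = cluster_size
        self.log = []          # list of (entry, set of node ids that stored it)
        self.commit_index = 0  # number of committed entries
        self.value = None      # state machine value; changes only on commit

    def majority(self):
        return self.cluster_size // 2 + 1

    def propose(self, entry):
        """Append to the leader's own log; the entry starts out uncommitted."""
        self.log.append((entry, {"leader"}))
        return len(self.log)  # 1-based index of the new entry

    def ack(self, index, follower_id):
        """Record that a follower stored entry `index`; return True if it is committed."""
        self.log[index - 1][1].add(follower_id)
        # Commit entries in log order while each has reached a majority.
        while (self.commit_index < len(self.log)
               and len(self.log[self.commit_index][1]) >= self.majority()):
            self.value = self.log[self.commit_index][0]
            self.commit_index += 1
        return self.commit_index >= index


leader = ToyLeader(cluster_size=3)
index = leader.propose(5)
print(leader.value)            # still uncommitted, so the value is unchanged
print(leader.ack(index, "B"))  # leader + B is 2 of 3 nodes
print(leader.value)
```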

Leader Election:

In Raft there are two timeout settings which control elections.

First is the election timeout.

The election timeout is the amount of time a follower waits until becoming a candidate.

The election timeout is randomized to be between 150ms and 300ms.

After the election timeout the follower becomes a candidate and starts a new election term…

…votes for itself…

…and sends out Request Vote messages to other nodes.

If the receiving node hasn’t voted yet in this term then it votes for the candidate…

…and the node resets its election timeout.

Once a candidate has a majority of votes it becomes leader.

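To restate: Raft has two timeout settings that control elections, the election timeout and the heartbeat timeout, with the election timeout being the longer of the two. The election timeout is how long a follower waits before becoming a candidate; it is randomized between 150ms and 300ms. When it expires, the follower becomes a candidate, starts a new election term, votes for itself, and sends Request Vote messages to the other nodes. A node that has not yet voted in this term votes for the candidate and resets its own election timeout. Once a candidate has a majority of votes, it becomes leader.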
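Randomizing the election timeout makes it likely that one follower times out first and starts its election before the others. A small sketch of that idea, using the 150ms to 300ms range from the text; the heartbeat interval of 50ms and the function names are assumptions.

```python
import random

ELECTION_TIMEOUT_MS = (150, 300)  # range quoted in the text above
HEARTBEAT_INTERVAL_MS = 50        # assumed value; it only needs to be well below 150


def new_election_timeout(rng=random):
    """Pick a fresh randomized election timeout in milliseconds."""
    low, high = ELECTION_TIMEOUT_MS
    return rng.uniform(low, high)


def first_to_time_out(node_ids, rng=random):
    """Give every follower its own timeout; the smallest one becomes candidate first."""
    timeouts = {node: new_election_timeout(rng) for node in node_ids}
    return min(timeouts, key=timeouts.get), timeouts


candidate, timeouts = first_to_time_out(["A", "B", "C"])
print(candidate, timeouts)
```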

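Voting rules:

- Each node has only one vote per term

- Only a candidate can vote for itself

- A node that receives a vote request must grant its vote if it still has one (full Raft additionally requires the candidate's log to be at least as up-to-date as the voter's)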
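The voting rules above can be sketched as a small Request Vote handler. The sketch deliberately leaves out the log up-to-date check that full Raft also applies; `ToyNode`, `handle_request_vote`, and `run_election` are hypothetical names.

```python
class ToyNode:
    """Holds the two pieces of state the voting rules depend on."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.term = 0
        self.voted_for = None  # who this node voted for in self.term

    def start_election(self):
        self.term += 1                 # a new election starts a new term
        self.voted_for = self.node_id  # only a candidate votes for itself
        return self.term

    def handle_request_vote(self, candidate_id, candidate_term):
        if candidate_term < self.term:
            return False               # request from a stale term
        if candidate_term > self.term:
            self.term = candidate_term
            self.voted_for = None      # new term: the single vote is available again
        if self.voted_for in (None, candidate_id):
            self.voted_for = candidate_id
            return True
        return False                   # the one vote for this term is already spent


def run_election(candidate, others):
    """Return (votes, won) for a candidate asking every other node for its vote."""
    term = candidate.start_election()
    votes = 1 + sum(n.handle_request_vote(candidate.node_id, term) for n in others)
    return votes, votes > (len(others) + 1) // 2


a, b, c = ToyNode("A"), ToyNode("B"), ToyNode("C")
print(run_election(a, [b, c]))
```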

The leader begins sending out Append Entries messages to its followers.

These messages are sent in intervals specified by the heartbeat timeout.

Followers then respond to each Append Entries message.

This election term will continue until a follower stops receiving heartbeats and becomes a candidate.

Let’s stop the leader and watch a re-election happen.

Node B is now leader of term 2.

Requiring a majority of votes guarantees that only one leader can be elected per term.

If two nodes become candidates at the same time then a split vote can occur.

Let’s take a look at a split vote example…

Two nodes both start an election for the same term…

…and each reaches a single follower node before the other.

Now each candidate has 2 votes and can receive no more for this term.

The nodes will wait for a new election and try again.

Node A received a majority of votes in term 5 so it becomes leader.

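To restate: the leader begins sending Append Entries messages to its followers at the interval set by the heartbeat timeout. Each follower resets its election timeout when a message arrives and responds to it. The election term continues until a follower stops receiving heartbeats, lets its election timeout expire, and becomes a candidate. If the leader is stopped (for example, because it crashes), a re-election happens; in the demo, node B becomes leader of term 2. Requiring a majority of votes guarantees that only one leader can be elected per term. If two nodes become candidates at the same time, a split vote can occur: two nodes start an election for the same term, and each reaches a single follower before the other does. Each candidate now has 2 votes and can receive no more in this term, so the nodes wait for a new election and try again (the election is restarted). In the demo, node A then receives a majority of votes in term 5 and becomes leader.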
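The split-vote arithmetic is easy to check by hand. The sketch below assumes a four-node cluster, which is consistent with two candidates holding 2 votes each but is not stated in the text; `tally` is a hypothetical helper.

```python
def tally(cluster_size, votes_by_candidate):
    """Return the candidate holding a majority of the cluster, or None on a split vote."""
    # Each node votes at most once per term, so the total cannot exceed the cluster size.
    assert sum(votes_by_candidate.values()) <= cluster_size
    majority = cluster_size // 2 + 1
    winners = [c for c, votes in votes_by_candidate.items() if votes >= majority]
    return winners[0] if winners else None


# Two candidates with 2 votes each in a 4-node cluster: a majority needs 3, so nobody wins.
print(tally(4, {"A": 2, "C": 2}))
# After the retry, one candidate reaches 3 votes and becomes leader.
print(tally(4, {"A": 3, "C": 1}))
```

Because a majority is more than half of the cluster and each node votes once per term, two candidates can never both reach it in the same term.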

Log Replication:

Once we have a leader elected we need to replicate all changes to our system to all nodes.

This is done by using the same Append Entries message that was used for heartbeats.

Let’s walk through the process.

First a client sends a change to the leader.

The change is appended to the leader’s log…

…then the change is sent to the followers on the next heartbeat.

An entry is committed once a majority of followers acknowledge it…

…and a response is sent to the client.

Now let’s send a command to increment the value by “2”.

Our system value is now updated to “7”.

Raft can even stay consistent in the face of network partitions.

Let’s add a partition to separate A & B from C, D & E.

Because of our partition we now have two leaders in different terms.

Let’s add another client and try to update both leaders.

One client will try to set the value of node B to “3”.

Node B cannot replicate to a majority so its log entry stays uncommitted.

The other client will try to set the value of node D to “8”.

This will succeed because it can replicate to a majority.

Now let’s heal the network partition.

Node B will see the higher election term and step down.

Both nodes A & B will roll back their uncommitted entries and match the new leader’s log.

Our log is now consistent across our cluster.

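To restate: once a leader is elected, all changes to the system must be replicated to all nodes, using the same Append Entries message that carries heartbeats. A client sends a change to the leader; the change is appended to the leader's log and sent to the followers on the next heartbeat. Once a majority of followers acknowledge it, the entry is committed and a response is sent to the client. For example, a command that increments the value by "2" updates the system value to "7".

Raft stays consistent even under a network partition. Suppose a partition separates A and B from C, D, and E, leaving two leaders in different terms, and a second client is added so that both leaders receive updates. One client tries to set the value on node B to "3"; node B cannot replicate to a majority (at least three nodes are needed, and its side of the split has only two), so its log entry stays uncommitted. The other client tries to set the value on node D to "8", which succeeds because node D can replicate to a majority. When the partition heals, node B sees the higher election term and steps down. Nodes A and B roll back their uncommitted entries and match the new leader's log, and the log is consistent across the cluster again.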
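The partition scenario comes down to two checks: whether a side of the split can still reach a majority, and how a stale node realigns its log after the partition heals. A minimal sketch, assuming log entries are (term, command) tuples and that the two leaders are in terms 1 and 2; `can_commit` and `reconcile` are hypothetical helpers, and real Raft performs the realignment incrementally through Append Entries.

```python
def can_commit(partition, cluster_size):
    """A leader can commit only if its side of the partition holds a majority."""
    return len(partition) >= cluster_size // 2 + 1


def reconcile(follower_log, leader_log):
    """Keep the common prefix, drop the divergent suffix, adopt the leader's entries."""
    common = 0
    while (common < min(len(follower_log), len(leader_log))
           and follower_log[common] == leader_log[common]):
        common += 1
    return follower_log[:common] + leader_log[common:]


CLUSTER_SIZE = 5
print(can_commit({"A", "B"}, CLUSTER_SIZE))       # minority side: entry stays uncommitted
print(can_commit({"C", "D", "E"}, CLUSTER_SIZE))  # majority side: entry can be committed

# Node B holds an uncommitted "set 3"; the new leader D committed "set 8" in a later term.
log_b = [(1, "set 5"), (1, "add 2"), (1, "set 3")]
log_d = [(1, "set 5"), (1, "add 2"), (2, "set 8")]
print(reconcile(log_b, log_d))
```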